FastAPI

Introduction:

FastAPI is a Python web framework for building APIs. It is built on Starlette and Pydantic and was heavily inspired by the earlier server versions of APIStar.

Benefits of FastAPI:

- Allows us to develop APIs quickly

- On-the-fly input data validation (via Pydantic)

- Automatic interactive API documentation using Swagger UI and ReDoc

- Supports OAuth2, JWT and simple HTTP authentication

- Swagger UI lets us test API endpoints directly from the browser

- Supports asynchronous (async/await) endpoints as well as regular functions
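The on-the-fly validation comes from Pydantic: FastAPI checks each request body against the declared model and answers invalid input with a 422 response. A minimal sketch of the underlying check, using Pydantic on its own (the `Item` model is hypothetical, for illustration only):

```python
from pydantic import BaseModel, ValidationError


class Item(BaseModel):
    # Hypothetical model: FastAPI would validate a request body against it
    name: str
    price: float


# Valid input: the numeric string "12.5" is coerced to the float 12.5
item = Item(name="pen", price="12.5")
print(item.price)  # 12.5

# Invalid input: "cheap" cannot be parsed as a float, so validation fails
try:
    Item(name="pen", price="cheap")
    rejected = False
except ValidationError as exc:
    rejected = True
    print(exc.errors()[0]["loc"])  # ('price',)
```

In an endpoint, FastAPI performs this step for us and turns the `ValidationError` details into the 422 response body.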

Quick Installation

```shell
pip install fastapi
```

We also need an ASGI server to run the API locally; this tutorial uses Uvicorn:

```shell
pip install uvicorn
```

Example

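```python
# main.py
from fastapi import FastAPI, HTTPException
from pydantic import BaseModel

app = FastAPI()

# In-memory "database": a list of city dicts
db = []


class City(BaseModel):
    name: str
    time_zone: str


@app.get('/')
def index():
    return {'healthcheck': 'True'}


@app.get('/cities')
def get_cities():
    return db


@app.get('/cities/{city_id}')
def get_city(city_id: int):
    # city_id is 1-based; return 404 instead of failing with IndexError
    if city_id < 1 or city_id > len(db):
        raise HTTPException(status_code=404, detail='City not found')
    return db[city_id - 1]


@app.post('/cities')
def create_city(city: City):
    # .dict() is Pydantic v1; on Pydantic v2 use city.model_dump()
    db.append(city.dict())
    return db[-1]


@app.delete('/cities/{city_id}')
def delete_city(city_id: int):
    # The parameter name must match {city_id} in the path
    if city_id < 1 or city_id > len(db):
        raise HTTPException(status_code=404, detail='City not found')
    db.pop(city_id - 1)
    return {}
```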
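In the example above a city's id is just its position in db, so deleting a city shifts the ids of every city after it (after deleting city 1, the old city 2 is served at /cities/1). A minimal plain-Python sketch of one alternative: keep cities in a dict keyed by an ever-increasing id. The `CityStore` class is hypothetical and not part of the original code; the endpoints would call its methods instead of indexing the list.

```python
from typing import Dict, Optional


class CityStore:
    """Hypothetical in-memory store that keeps ids stable across deletions."""

    def __init__(self) -> None:
        self._cities: Dict[int, dict] = {}
        self._next_id = 1

    def create(self, city: dict) -> int:
        # Assign the next unused id; ids are never reused
        city_id = self._next_id
        self._cities[city_id] = city
        self._next_id += 1
        return city_id

    def get(self, city_id: int) -> Optional[dict]:
        # Returns None for unknown ids (an endpoint would turn this into a 404)
        return self._cities.get(city_id)

    def delete(self, city_id: int) -> bool:
        # Returns False if the id did not exist
        return self._cities.pop(city_id, None) is not None


store = CityStore()
paris = store.create({"name": "Paris", "time_zone": "Europe/Paris"})
tokyo = store.create({"name": "Tokyo", "time_zone": "Asia/Tokyo"})
store.delete(paris)
print(store.get(tokyo))  # Tokyo keeps id 2 after Paris is deleted
```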

Save the code as main.py and run the following command in a shell or command prompt (--reload restarts the server whenever the code changes, which is handy during development):

```shell
uvicorn main:app --reload
```

The default port is 8000. To run on a different port:

```shell
uvicorn main:app --port <portnumber>
```

Use Uvicorn's help option to see the other parameters:

```shell
uvicorn --help
```

Open the browser at http://127.0.0.1:8000/ and you should see the health-check response {"healthcheck": "True"}.

Interactive API Docs

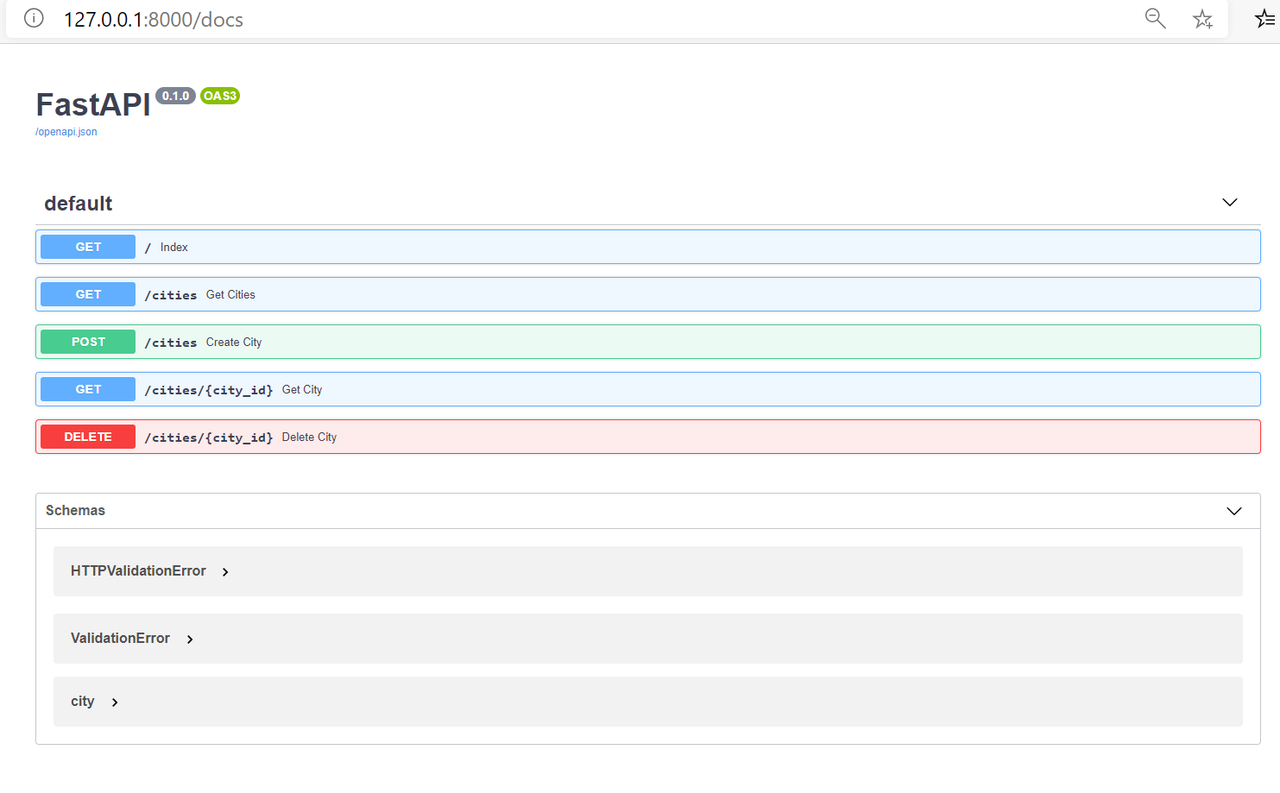

Now go to http://127.0.0.1:8000/docs

You will see the automatic interactive API documentation (provided by Swagger UI).

Five endpoints are listed on the screen:

- GET / Index

- GET /cities Get Cities

- POST /cities Create City

- GET /cities/{city_id} Get City

- DELETE /cities/{city_id} Delete City

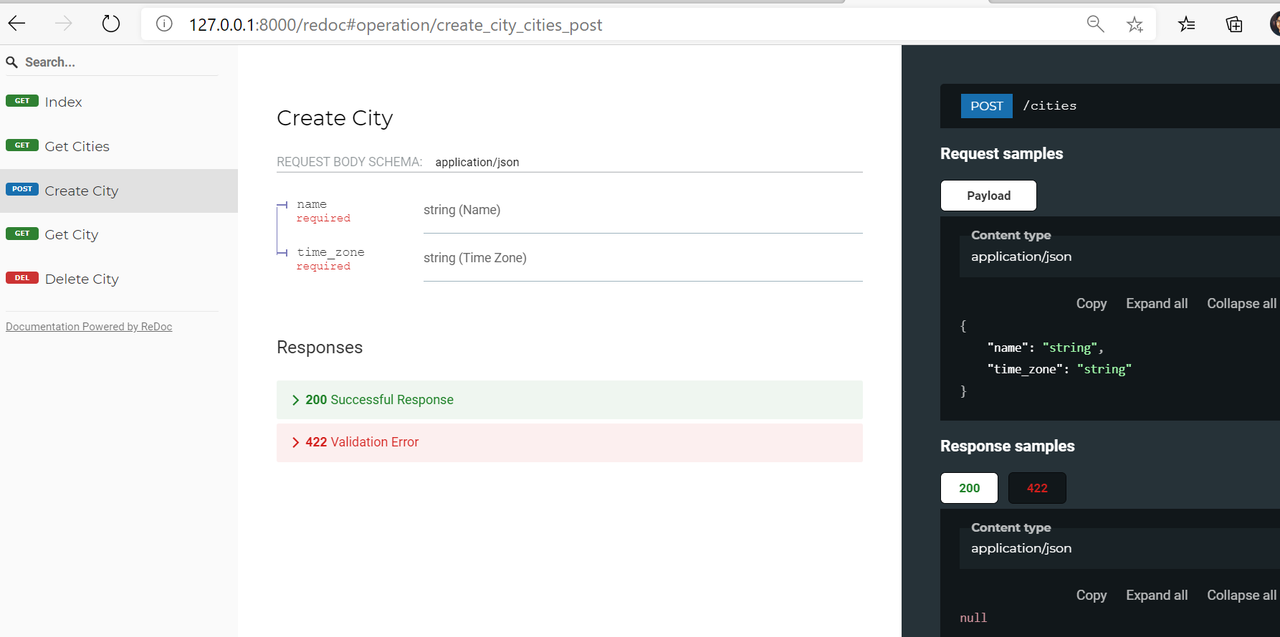

Alternative API docs

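Now go to http://127.0.0.1:8000/redoc to see the alternative documentation, provided by ReDoc.

From the Swagger UI page we can test the methods above to create, get, or delete a city. This covers the create, read, and delete parts of CRUD; the example has no update endpoint.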
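Besides clicking through Swagger UI, the same calls can be made from a script. A minimal sketch using only the Python standard library (urllib.request); it assumes the server is running at the default address http://127.0.0.1:8000, and the helper names `build_request` and `send` are hypothetical:

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000"  # Uvicorn's default host and port


def build_request(method, path, payload=None):
    """Build (but do not send) a request to the cities API."""
    data = None
    headers = {}
    if payload is not None:
        # JSON-encode the body, as FastAPI expects for the City model
        data = json.dumps(payload).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(BASE_URL + path, data=data, headers=headers, method=method)


def send(req):
    """Send a request to the running server and decode the JSON response."""
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Usage, with `uvicorn main:app --reload` running:
# send(build_request("POST", "/cities", {"name": "Paris", "time_zone": "Europe/Paris"}))
# send(build_request("GET", "/cities"))
# send(build_request("GET", "/cities/1"))
# send(build_request("DELETE", "/cities/1"))
```

Keeping request construction separate from sending makes it easy to inspect a request before any network call is made.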

Machine Learning API Example

Let's look at a machine learning example in which we wrap an ML model in an API to serve predictions online with just a few lines of code.

We will use the Kaggle HR Analytics dataset and the pickle file of an already-trained model that predicts whether an employee will leave the organization.

Below is the Python code:

```python
import pickle

import lightgbm  # not used directly, but must be installed so the pickled LightGBM model can be loaded
import numpy as np
from fastapi import FastAPI
from pydantic import BaseModel

from configs import config  # project module holding the pickle file paths

# Instance of FastAPI class
app = FastAPI()

# Load pickle files for features, encoder and model
with open(config.enc_pickle, 'rb') as f:
    enc = pickle.load(f)
with open(config.feature_pickle, 'rb') as f:
    features = pickle.load(f)
with open(config.mod_pickle, 'rb') as f:
    clf = pickle.load(f)


# Declare input data structure
class Data(BaseModel):
    satisfaction_level: float
    last_evaluation: float
    number_project: float
    average_montly_hours: float
    time_spend_company: float
    Work_accident: float
    promotion_last_5years: float
    sales: str
    salary: str

    # Set default values for the API docs to render
    # (Pydantic v1 syntax; Pydantic v2 renames schema_extra to json_schema_extra)
    class Config:
        schema_extra = {
            "example": {
                "satisfaction_level": 0.38,
                "last_evaluation": 0.53,
                "number_project": 2,
                "average_montly_hours": 157,
                "time_spend_company": 3,
                "Work_accident": 0,
                "promotion_last_5years": 0,
                "sales": "support",
                "salary": "low"
            }
        }


@app.post("/predict")
def predict_attrition(data: Data):
    # Extract data in the order the model was trained on
    data_dict = data.dict()
    to_predict = [data_dict[feature] for feature in features]

    # Apply one-hot encoding to the last two (categorical) features
    encoded_features = list(enc.transform(np.array(to_predict[-2:]).reshape(1, -1))[0])
    to_predict = np.array(to_predict[:-2] + encoded_features)

    # Create and return prediction
    prediction = clf.predict(to_predict.reshape(1, -1))
    return {"prediction": int(prediction[0])}
```
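The prediction step relies on two assumptions baked into the pickle files: features lists the inputs in the order the model was trained on, and its last two entries are the categorical columns (sales and salary) that the encoder one-hot encodes. A plain-Python sketch of that transformation, with a hypothetical feature order and hypothetical category lists standing in for the fitted encoder (the real ones come from the pickle files, which are not shown):

```python
# Hypothetical feature order standing in for the features pickle:
# numeric inputs first, then the two categorical columns
FEATURES = [
    "satisfaction_level", "last_evaluation", "number_project",
    "average_montly_hours", "time_spend_company", "Work_accident",
    "promotion_last_5years", "sales", "salary",
]

# Hypothetical category lists standing in for the fitted encoder's categories
CATEGORIES = {
    "sales": ["sales", "support", "technical"],
    "salary": ["high", "low", "medium"],
}


def one_hot(value, categories):
    """Return a 0/1 list with 1.0 at the position of value (all zeros if unseen)."""
    return [1.0 if value == category else 0.0 for category in categories]


def build_feature_vector(data_dict):
    """Order the inputs, keep the numeric ones, and one-hot encode the last two."""
    ordered = [data_dict[name] for name in FEATURES]
    numeric = [float(value) for value in ordered[:-2]]
    encoded = one_hot(ordered[-2], CATEGORIES["sales"]) + one_hot(ordered[-1], CATEGORIES["salary"])
    return numeric + encoded


example = {
    "satisfaction_level": 0.38, "last_evaluation": 0.53, "number_project": 2,
    "average_montly_hours": 157, "time_spend_company": 3, "Work_accident": 0,
    "promotion_last_5years": 0, "sales": "support", "salary": "low",
}
vector = build_feature_vector(example)
print(len(vector))  # 13: 7 numeric values + 3 sales columns + 3 salary columns
```

If the saved feature list ever put a categorical column somewhere other than the end, slicing with [-2:] would encode the wrong values, so the order in the pickle file matters.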

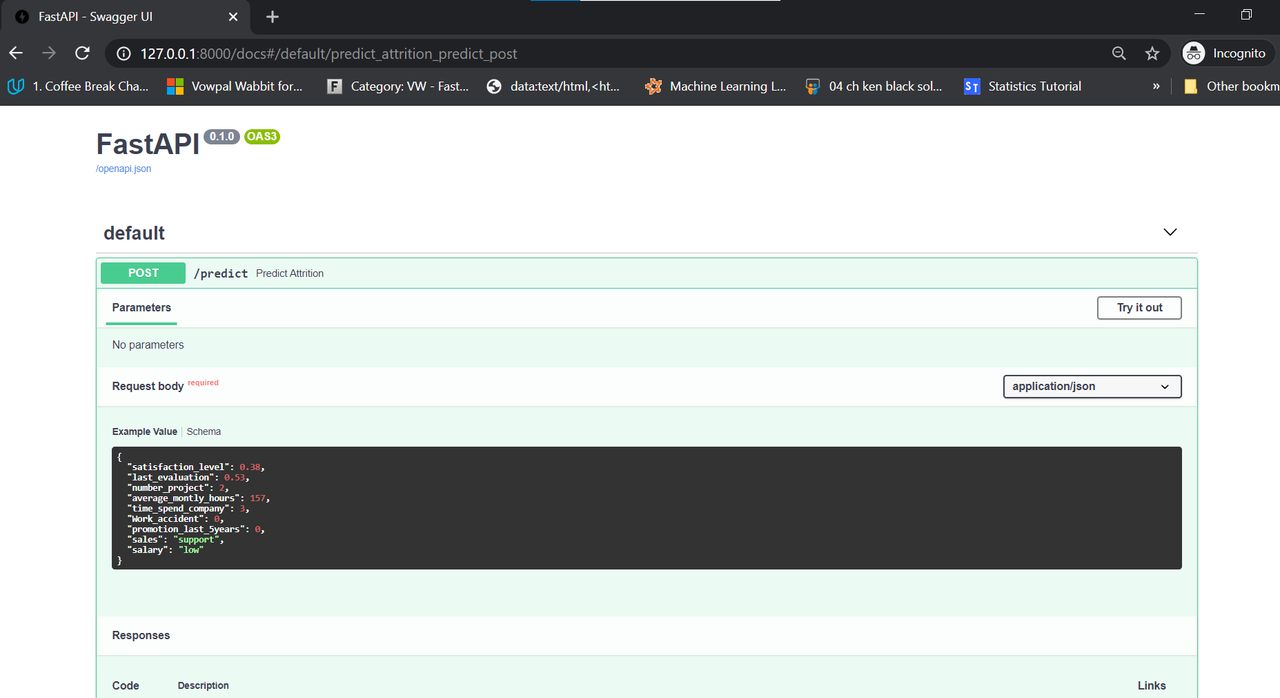

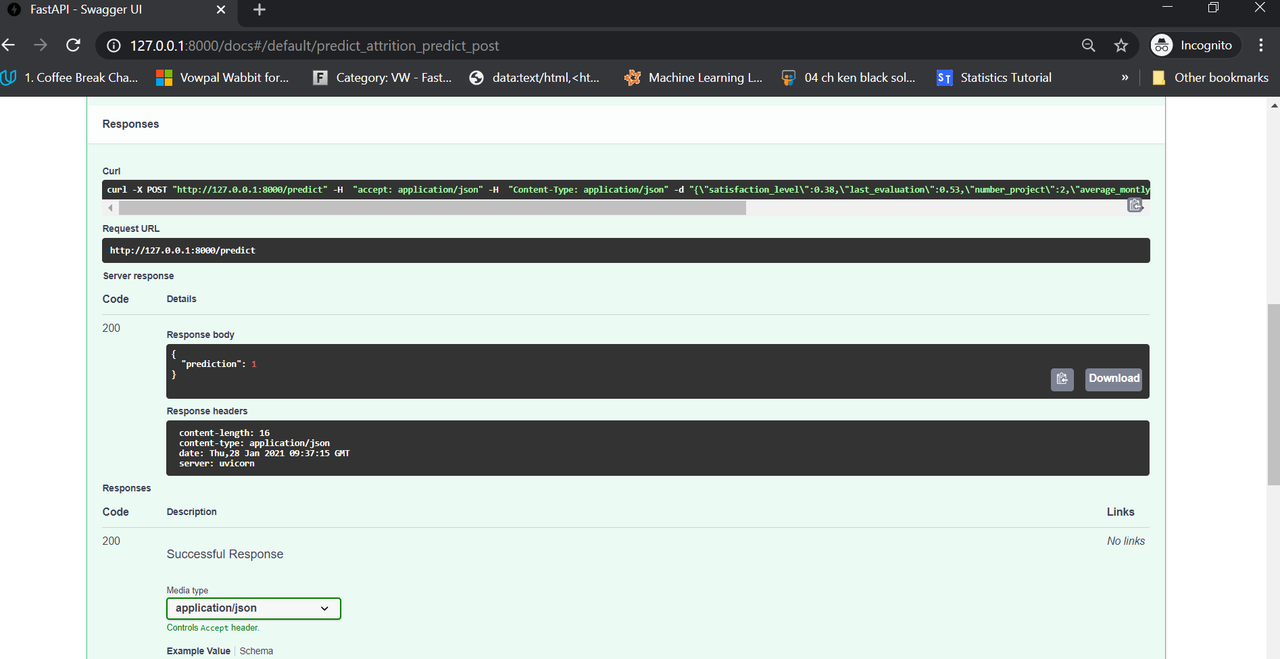

Here is how the FastAPI docs page looks after starting the Uvicorn server with the following command

```shell
uvicorn main:app --reload
```

and opening http://127.0.0.1:8000/docs in the browser:

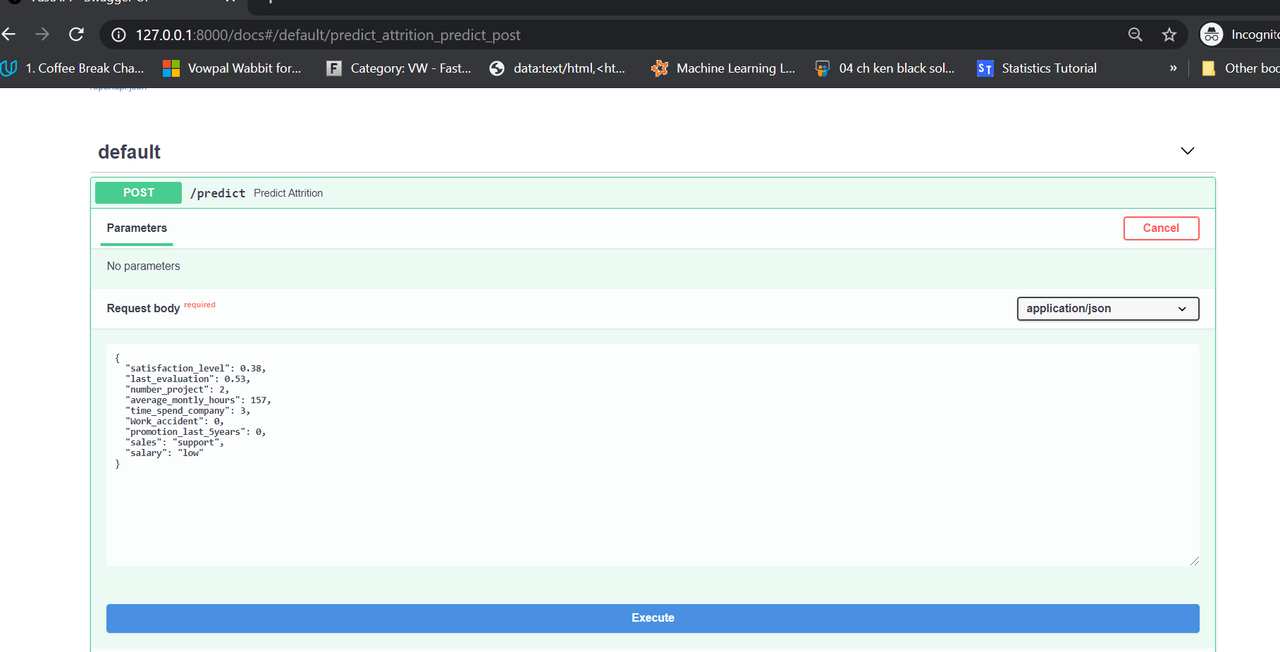

Click the "Try it out" button and a JSON body with sample input values appears, ready for testing the POST method of the API.

Click the "Execute" button to get a live prediction from the model, along with the equivalent curl command and the response body.

We can see that the model has predicted that the employee will leave the organization, based on the input features passed to it.
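Outside the browser, the same POST request can be sent from Python. A standard-library sketch, assuming the API is running on Uvicorn's default host and port; the helper name `get_prediction` is hypothetical:

```python
import json
import urllib.request

# Same sample body as the example in the Data model's schema_extra
payload = {
    "satisfaction_level": 0.38,
    "last_evaluation": 0.53,
    "number_project": 2,
    "average_montly_hours": 157,
    "time_spend_company": 3,
    "Work_accident": 0,
    "promotion_last_5years": 0,
    "sales": "support",
    "salary": "low",
}

# Assumes the API is running on Uvicorn's default host and port
request = urllib.request.Request(
    "http://127.0.0.1:8000/predict",
    data=json.dumps(payload).encode("utf-8"),
    headers={"Content-Type": "application/json"},
    method="POST",
)


def get_prediction(req):
    """Send the request and return the "prediction" field of the JSON response."""
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))["prediction"]


# With the server running: get_prediction(request) returns the model's class label as an int
```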

Lastly, all that remains is to package the API in a Docker image and push it to a Docker registry in Azure for hosting.